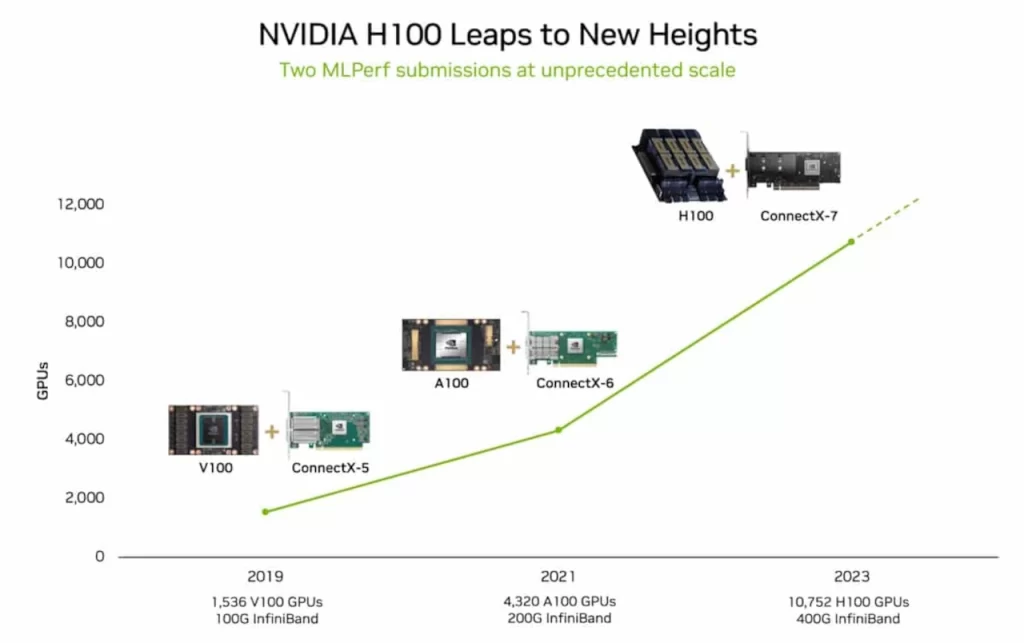

The results of the industry-standard MLPerf training v3.1 benchmark for training AI models were released by MLCommons on November 8. NVIDIA Eos, an artificial intelligence supercomputer powered by a staggering 10,752 NVIDIA H100 Tensor Core GPUs and NVIDIA Quantum-2 InfiniBand networking, accomplished in just 3.9 minutes a training benchmark using a GPT-3 model with 175 billion parameters and one billion tokens. This is a nearly threefold improvement over the 10.9-minute benchmark set by NVIDIA less than six months ago when the test was first introduced.

Extrapolating from a subset of the complete GPT-3 dataset used by ChatGPT service, the benchmark indicates that Eos could now complete the training process in a mere eight days, which is 73 times faster than the previous state-of-the-art system that used 512 A100 GPUs.

Generative AI must accommodate efficient scalability because LLMs increase annually by an order of magnitude. In upcoming MLPerf benchmarks, the industry can anticipate further advancements in AI performance due to software updates and optimisations.

At the same time, Intel reported that by incorporating the FP8 data type into the v3.1 training GPT-3 benchmark, Intel Gaudi2 achieved a twofold performance increase. In contrast to the June MLPerf benchmark, the training process was completed in 153.58 minutes using 384 Intel Gaudi2 accelerators, a reduction of more than half. FP8 is supported in both E5M2 and E4M3 formats by the Gaudi2 accelerator, providing the option for delayed scaling if required.

Using BF16, Intel Gaudi2 executed training on the Stable Diffusion multi-modal model utilising 64 accelerators in 20.2 minutes. For forthcoming MLPerf training benchmarks, the efficacy of Stable Diffusion will be evaluated using the FP8 data type.

BERT and ResNet-50 achieved benchmark times of 13.27 and 15.92 minutes, respectively, when executed on eight Intel Gaudi2 accelerators with BF16.

Due to its price-to-performance ratio, Gaudi2 remains the sole substitute for NVIDIA’s H100 in AI computation, says Intel.

MLPerf HPC

A distinct benchmark for AI-assisted simulations on supercomputers, MLPerf HPC, H100 GPUs achieved performance levels up to twice as high as NVIDIA A100 Tensor Core GPUs in the previous HPC round. The outcomes exhibited advances up to 16 times since the initial MLPerf HPC round in 2019.

ASUSTek, Ailiverse, Azure, Azure+NVIDIA, CTuning, Clemson University Research Computing and Data, Dell, Fujitsu, GigaComputing, Google, Intel+Habana Labs, Lenovo, Krai, NVIDIA, NVIDIA+CoreWeave, Quanta Cloud Technology, Supermicro+Red Hat, Supermicro, and xFusion are among the 19 organisations that have contributed performance results to MLPerf Training v3.1. Red Hat, Ailiverse, CTuning Foundation, and Clemson University Research Computing and Data were in attendance for the first time.

MLPerf HPC v3.0 Benchmarks

A new test was incorporated into the benchmark to train OpenFold, a model that generates three-dimensional protein structures from amino acid sequences. OpenFold enables healthcare researchers to complete critical tasks in minutes that previously required weeks or months.

Understanding the structure of a protein is crucial for rapidly developing effective pharmaceuticals, as the vast majority of medications act on proteins, the cellular machinery that regulates numerous biological processes.

During the MLPerf HPC test, OpenFold was trained on H100 GPUs in 7.5 minutes. The OpenFold examination is emblematic of the eleven-day, 128-accelerator AlphaFold training procedure completed two years ago.

NVIDIA BioNeMo, a platform for generative AI in drug discovery, will integrate an upcoming release of the OpenFold model and the training software used by NVIDIA.

MLPerf HPC v3.0 comprises more than thirty outcomes, reflecting a participation surge of 50% compared to the previous year. Eight organisations received submissions, including Clemson University Research Computing and Data, Texas Advanced Computing Centre, Dell, Fujitsu+RIKEN, HPE+Lawrence Berkeley National Laboratory, NVIDIA, and HPE+Lawrence Berkeley National Laboratory. HPE+Lawrence Berkeley National Laboratory and Clemson University Research Computing and Data submitted their data for the first time.

Five organisations have contributed to developing the new OpenFold benchmark: Texas Advanced Computing Centre, HPE+Lawrence Berkeley National Laboratory, Clemson University Research Computing and Data, and NVIDIA.

The significant advancements in AI for science showcased in the MLPerf HPC benchmark suite will aid discoveries. An instance of this is the fourteenfold increase in speed of the DeepCAM weather modelling benchmark since its initial release. This demonstrates how swift advancements in machine learning systems can give scientists superior resources to tackle crucial research domains and further world comprehension.